Striking a Balance: Privacy, Data, and Ethical Considerations in AI

Dr. Manjeet Rege on the need for the responsible development and use of AI

By Evelyn Hoover

With such powerful AI tools that can generate text, images, code and more comes a greater responsibility to ensure they are developed and used ethically.

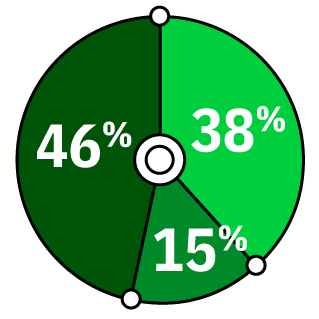

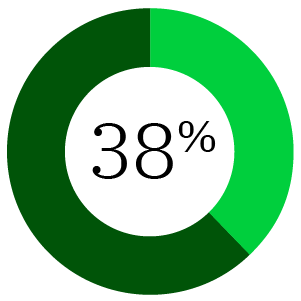

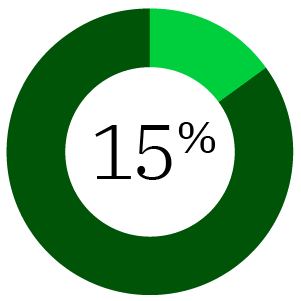

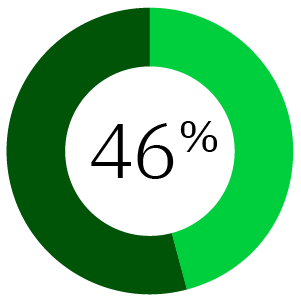

A Pew Research survey found that Americans are concerned about the ethical use of AI. “On balance, a greater share of Americans say they are more concerned than excited about the increased use of artificial intelligence in daily life (38%) than say they are more excited than concerned (15%). Many express ambivalent views: 46% say they are equally concerned and excited,” the survey concluded.

Chart: How Americans feel about the increased use of artificial intelligence in daily life (more concerned than excited: 38%; more excited than concerned: 15%; equally concerned and excited: 46%)

The Ethics of AI Bias

Software engineering has changed: It’s no longer just about programming but about engineering intelligent software with AI embedded in it. “That’s why responsible development and AI use is so important: AI is integrated into our daily lives,” explains Dr. Manjeet Rege from the University of St. Thomas in St. Paul, Minnesota. “It is essential to ensure that these systems are developed and used responsibly.”

Bias, which can be introduced while AI models are trained, can cause discrimination. For example, 75% of companies use AI to screen resumes from job applicants, according to CNBC. If fewer resumes from women are used to train the models, the AI can develop a bias against female candidates.

“You want to hire people based on merit. … If you have that initial AI scanner giving preferential treatment to male candidates, you will never end up hiring based on merit,” says Rege, professor and chair of the Department of Software Engineering and Data Science, and director of the Center for Applied Artificial Intelligence at the university.

Privacy protection is another issue, as AI systems rely on very large data sets, which can include sensitive information about people. This gives rise to the need for transparency in how AI systems make decisions.

“If I see a bias, if proper representation of different, let’s say, skin colors in the image face recognition is not there, somebody has to take ownership of that and that is where accountability comes in,” Rege adds.

Accountability rests with the AI developers, who must ensure the models are created with equal representation of different genders and races. Responsibility also lies with the middle-tier companies that may be deploying AI systems to their customers. These companies can choose which AI system to buy and have a responsibility to vet the solutions.

In this case, the procurement manager should be asking the solution provider about transparency in terms of what data the model was trained on, Rege asserts.

A classic example of how this can go wrong occurred in 2019 with Amazon’s Rekognition facial recognition software, which was developed to help law enforcement officials analyze facial images. The AI solution came under fire for its bias when it came to analyzing images of people with darker skin tones. The episode underscored the mission of Black in AI, a nonprofit founded in 2017 that seeks to increase the presence of Black people in the field of AI.

4 Steps to Combat AI Bias

Increase diversity of data collection

Because data is like the food for an AI system, Rege says, it’s important to make sure your AI systems are trained on a representative population.
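One way to picture a representativeness check is to compare each group's share of the training data with its share of a reference population. The sketch below is only an illustration: the function name `representation_gaps`, the example labels, the 50/50 reference shares and the 10-point threshold are all assumptions, not anything described by Rege.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return, per group, (share in training data) minus (reference share)."""
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical example: gender labels attached to a set of training resumes.
training_labels = ["male"] * 80 + ["female"] * 20
gaps = representation_gaps(training_labels, {"male": 0.5, "female": 0.5})

# Flag groups whose share falls more than 10 points below the reference
# (the 10-point threshold is an assumption chosen for illustration).
underrepresented = [group for group, gap in gaps.items() if gap < -0.1]
print(underrepresented)
```

In practice the reference shares would come from the population the system is meant to serve, and a flagged group is a prompt to collect more data rather than a verdict on the model.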

Increase diversity in AI development

Diversity shouldn’t end with the data itself. The teams of people who train the models should also be diverse. Diverse teams are more likely to recognize and question biases and assumptions that might otherwise be overlooked.

Develop a feedback mechanism

Stakeholder feedback is vital. Gather it from people of diverse backgrounds throughout the development of the AI models.

Regular auditing and monitoring

As people’s demographics and habits change, the AI models should be audited. By monitoring the AI systems regularly, developers can identify when the models should be retrained.
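A recurring audit could be as simple as comparing how often each group is selected in a recent batch of decisions. The following is a minimal sketch under stated assumptions: the function names, the group labels "A" and "B" and the sample batch are hypothetical, and the 0.8 default is borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance rather than from the article.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if was_selected else 0)
    return {group: selected[group] / totals[group] for group in totals}


def needs_review(decisions, min_ratio=0.8):
    """Flag a batch when the lowest group rate is below min_ratio of the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return False  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest < min_ratio


# Hypothetical audit batch: group A is selected 30% of the time, group B 15%.
audit_batch = (
    [("A", True)] * 30 + [("A", False)] * 70
    + [("B", True)] * 15 + [("B", False)] * 85
)
print(needs_review(audit_batch))
```

Running a check like this on a schedule gives developers a concrete signal for when a model may need retraining.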

Balancing Privacy and Data in AI Systems

Increasingly, AI systems’ need for training data runs counter to individuals’ need for privacy.

Rege suggests four ways to provide the systems with data while protecting privacy: data anonymization, data minimization, federated learning and synthetic data.

Data anonymization

As data is collected, what Rege refers to as “noise” can be added to it. The process begins by removing identifying information. Then some of the remaining data is randomized. With census data, for example, ages could be randomly increased or decreased so that individuals can no longer be identified.
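The two steps described here, dropping identifiers and then randomizing a value, can be sketched in a few lines. The field names, the sample record and the three-year shift are assumptions made for illustration. Simple noise like this does not by itself guarantee anonymity, since remaining fields such as a ZIP code can still narrow down who a record belongs to.

```python
import random

# Hypothetical names of fields that directly identify a person.
IDENTIFYING_FIELDS = {"name", "address"}


def anonymize(record, rng, max_age_shift=3):
    """Drop identifying fields, then shift the age by a random amount."""
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    if "age" in cleaned:
        shifted = cleaned["age"] + rng.randint(-max_age_shift, max_age_shift)
        cleaned["age"] = max(0, shifted)  # keep ages non-negative
    return cleaned


rng = random.Random(42)  # seeded only so the example is repeatable
record = {"name": "Jane Doe", "address": "1 Main St", "age": 34, "zip": "55105"}
anonymized = anonymize(record, rng)
print(anonymized)
```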

Data minimization

Collecting only the minimum amount of data needed for the task at hand means the AI models aren’t exposed to data they don’t need.
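In code, minimization often amounts to an allow-list applied before data is stored or passed to a model. This sketch assumes a resume-screening task; the field names and the `minimize` helper are hypothetical.

```python
# Hypothetical allow-list: the only fields the screening task is assumed to need.
REQUIRED_FIELDS = ("years_experience", "skills")


def minimize(record, required=REQUIRED_FIELDS):
    """Keep only the allow-listed fields; everything else is never stored."""
    return {field: record[field] for field in required if field in record}


applicant = {
    "name": "Jane Doe",
    "date_of_birth": "1990-01-01",
    "years_experience": 8,
    "skills": ["python", "sql"],
}
print(minimize(applicant))
```

An allow-list is safer than a block-list here because any new field added upstream is excluded by default.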

Federated learning

In federated learning, the model is trained where the data already resides, across multiple locations and devices, and only the resulting model updates are shared. This provides increased privacy and data security because the raw data isn’t transported.
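A toy version of this idea is federated averaging: each client trains on its own data, and a server combines only the resulting model weights. The sketch below assumes a one-parameter model `y = weight * x`, two hypothetical clients and arbitrary learning settings. It illustrates the pattern and is not a production protocol.

```python
def local_update(weight, data, lr=0.01, epochs=20):
    """Run gradient descent for y = weight * x using one client's own data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_round(global_weight, clients):
    """Average the locally trained weights, weighted by each client's data size."""
    total = sum(len(data) for data in clients)
    return sum(
        local_update(global_weight, data) * len(data) for data in clients
    ) / total


# Hypothetical clients; each list stays "on device" and only weights are shared.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]

weight = 0.0
for _ in range(10):
    weight = federated_round(weight, clients)
print(round(weight, 3))
```

Only the numeric weights cross the boundary between client and server in each round; the `(x, y)` pairs never leave the client lists.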

Synthetic data

It’s possible to train AI models on fake data, which is itself generated by AI models, rather than training them on actual data.
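As a much simpler stand-in for an AI-based generator, the sketch below fits a normal distribution to a small set of real values and samples new values from it. The ages and function names are hypothetical, and real synthetic-data tools model far richer structure than a single column.

```python
import random
import statistics


def fit_gaussian(values):
    """Summarize the real values by their mean and standard deviation."""
    return statistics.mean(values), statistics.stdev(values)


def sample_synthetic(values, n, rng):
    """Draw n synthetic values from the distribution fitted to the real ones."""
    mean, stdev = fit_gaussian(values)
    return [rng.gauss(mean, stdev) for _ in range(n)]


real_ages = [23, 35, 41, 29, 52, 47, 38, 31]  # hypothetical real records
rng = random.Random(0)  # seeded only so the example is repeatable
synthetic_ages = sample_synthetic(real_ages, 1000, rng)
print(round(statistics.mean(synthetic_ages), 1))
```

The synthetic values follow the overall shape of the real ones without reproducing any individual record, which is the property that makes synthetic data attractive for privacy.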

The Future of AI

Rege sees a need for education and regulation. The University of St. Thomas is among the first to begin offering master’s degrees in AI.

“As humans, we are developing AI and we are doing it for humans,” Rege says. “Humans keeping humans in the loop is extremely important.”

The need for human involvement in AI begins with the training models but extends all the way through to the deployment of AI solutions.

Oversight is also vital. Several countries have adopted different approaches to AI oversight:

- The U.S. took what Rege calls a “market-driven approach” until the Biden Administration’s recent executive order.

- The UK adopted what its government calls a “pro-innovation” approach, which Rege says focuses on protecting citizens.

- China took a governmental control approach, passing laws targeted specifically at regulating generative AI.

The U.S. executive order was designed to get the process started, according to Rege. “I do hope the U.S. plays a larger role in terms of regulation here,” he adds. “We saw what happened with social networks in the past where the U.S. did not do anything about it.”

To that end, Rege expects additional regulation and standardization focusing on ethical AI development and deployment on both the national and international levels. Data governance, including who owns what data, how it’s being used and who benefits from it, will be key focuses in the coming year.

He also foresees a greater emphasis on human-centric AI. “There is research out there that shows that human plus AI can lead to a lot more efficiency than what just a human can do,” he says. For example, ARK Invest’s 2023 Big Ideas report shows a 55% increase in coding efficiency with GitHub Copilot.
